Unity-based AI agent development is a core part of modern game creation. It lets you design non-player characters (NPCs) and adversaries that behave intelligently, interact dynamically, and adapt to both the environment and the player. A solid grasp of these techniques can greatly improve the experience of almost any game.

What is a Unity-based AI agent?

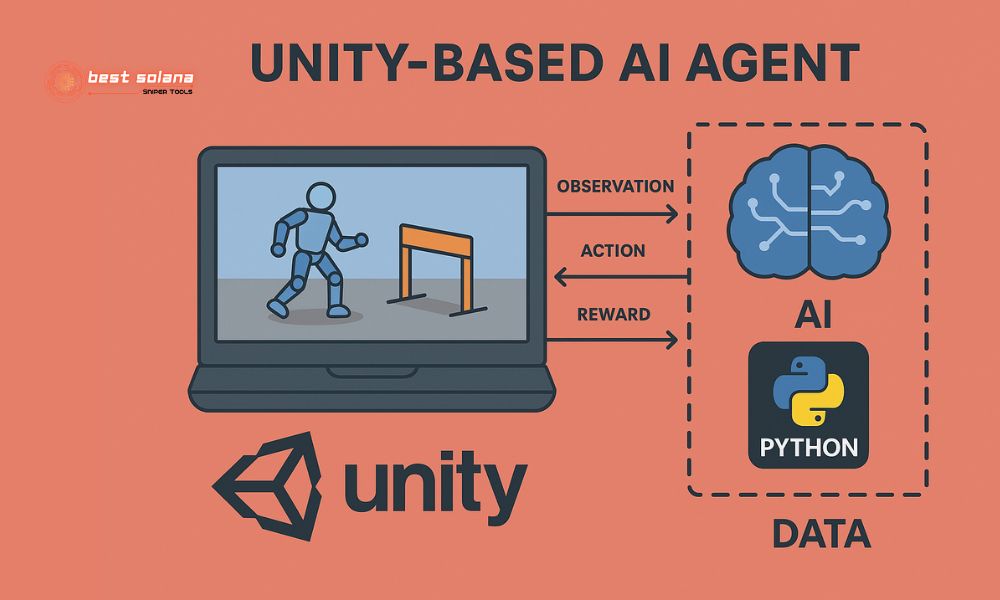

A Unity-based AI agent is an in-game entity programmed to perceive its surroundings, process that information, and make autonomous decisions about how to act. Its behavior can range from simple tasks, like following a predefined path, to complex ones such as tactical combat, pathfinding, or social interaction with other agents and the player.

Unity provides a rich set of tools and APIs to support AI development, from its built-in navigation system to extensive C# scripting capabilities. Crafting a believable Unity-based AI agent is key to immersive gameplay.

Core components of a Unity-based AI agent

To build an effective Unity-based AI agent, we need to understand its main parts:

Perception: This is the agent’s ability to gather information from the environment. In Unity, this is often achieved through:

- Raycasting and sphere casting: To detect obstacles, enemies, or objects of interest along a line of sight (Physics.Raycast and Physics.SphereCast in Unity’s API).

- Trigger Colliders: To recognize when other objects enter or exit a specific area.

- Auditory Perception: Simulating the ability to hear sounds from various sources.
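As a rough illustration of sight and trigger-based perception, the component below tests whether a target lies inside a view cone and whether a Physics.Raycast toward it is blocked, and it keeps a set of the colliders currently inside its trigger volume. It is a minimal sketch: the class name SightSensor, the 1.5-unit eye height, and all field names are assumptions. Note that Unity only sends trigger messages when at least one of the two objects has a Rigidbody (a kinematic one is fine).

```csharp
using System.Collections.Generic;
using UnityEngine;

// Hypothetical perception component: view-cone + line-of-sight check,
// plus tracking of colliders inside this object's trigger volume.
[RequireComponent(typeof(SphereCollider))]
public class SightSensor : MonoBehaviour
{
    public Transform target;              // e.g. the player
    public float viewDistance = 15f;      // maximum sight range
    [Range(0f, 180f)]
    public float halfViewAngle = 60f;     // half of the view cone, in degrees
    public LayerMask obstacleMask;        // layers that block sight; exclude the target's layer

    // Colliders currently inside the trigger volume.
    public readonly HashSet<Collider> nearby = new HashSet<Collider>();

    void Reset()
    {
        // Editor-time default: make the collider a trigger so OnTrigger* messages fire.
        GetComponent<SphereCollider>().isTrigger = true;
    }

    public bool CanSeeTarget()
    {
        if (target == null) return false;

        Vector3 eye = transform.position + Vector3.up * 1.5f; // assumed eye height
        Vector3 toTarget = target.position - eye;
        float distance = toTarget.magnitude;

        if (distance > viewDistance) return false;
        if (Vector3.Angle(transform.forward, toTarget) > halfViewAngle) return false;

        // Any obstacle hit before the target's distance means sight is blocked.
        return !Physics.Raycast(eye, toTarget.normalized, distance, obstacleMask);
    }

    void OnTriggerEnter(Collider other) => nearby.Add(other);
    void OnTriggerExit(Collider other) => nearby.Remove(other);
}
```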
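Hearing is usually simulated rather than computed from real audio. One common approach, sketched here under our own assumptions (NoiseSystem and HearingSensor are hypothetical names, not Unity APIs), is to have noisy actions broadcast a position and a radius through a C# event, and have each listener compare that radius with its own distance:

```csharp
using System;
using UnityEngine;

// Hypothetical global "noise bus": a sound source reports where it happened
// and how far it carries, in world units.
public static class NoiseSystem
{
    public static event Action<Vector3, float> NoiseMade;

    public static void Emit(Vector3 position, float radius) => NoiseMade?.Invoke(position, radius);
}

// Listener component: remembers the most recent noise it could hear.
public class HearingSensor : MonoBehaviour
{
    [Tooltip("Multiplier on each noise's radius; above 1 means better hearing.")]
    public float sensitivity = 1f;

    public Vector3? LastHeardPosition { get; private set; }

    void OnEnable() => NoiseSystem.NoiseMade += OnNoise;
    void OnDisable() => NoiseSystem.NoiseMade -= OnNoise;

    void OnNoise(Vector3 position, float radius)
    {
        // Straight-line distance only; walls do not muffle sound in this sketch.
        if (Vector3.Distance(transform.position, position) <= radius * sensitivity)
            LastHeardPosition = position;
    }
}
```

For example, a gunshot could call NoiseSystem.Emit(transform.position, 20f), and a guard’s decision logic could send it to investigate LastHeardPosition. A more realistic version might reduce the effective radius when a raycast toward the source hits a wall.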

Decision Making: After gathering information, the agent needs to process it and decide what to do next. Common techniques include:

- Finite State Machines (FSMs): A simple yet effective model where the agent has a set of states (e.g., patrol, chase, attack, flee) and conditions for transitioning between them.

- Behavior Trees (BTs): A hierarchical, tree-like structure that allows for more complex and modular behaviors than FSMs. BTs include nodes like Sequence, Selector, Decorator, and Action.

- Utility AI: A system that evaluates and selects actions based on the “utility score” of each potential action in the current situation.
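A finite state machine for the patrol, chase, attack, and flee example can live in a plain C# class with no UnityEngine dependency, which keeps the transition rules easy to unit-test. This is a sketch only: GuardFsm, Senses, and the thresholds are hypothetical, and in a game a MonoBehaviour would fill in Senses from its sensors each frame and act on the returned state.

```csharp
// States named in the FSM description.
public enum GuardState { Patrol, Chase, Attack, Flee }

// Snapshot of what the agent perceived this tick (filled in by its sensors).
public readonly struct Senses
{
    public readonly bool SeesPlayer;
    public readonly float DistanceToPlayer;
    public readonly float Health01; // current health as a fraction of max (0..1)

    public Senses(bool seesPlayer, float distanceToPlayer, float health01)
    {
        SeesPlayer = seesPlayer;
        DistanceToPlayer = distanceToPlayer;
        Health01 = health01;
    }
}

public sealed class GuardFsm
{
    public GuardState State { get; private set; } = GuardState.Patrol;
    public float AttackRange = 2f;   // hypothetical tuning values
    public float FleeHealth = 0.25f;

    // Applies the transition rules for the current state and returns the new state.
    public GuardState Tick(in Senses s)
    {
        // Low health overrides every other transition.
        if (s.Health01 <= FleeHealth) return State = GuardState.Flee;

        switch (State)
        {
            case GuardState.Patrol:
                if (s.SeesPlayer) State = GuardState.Chase;
                break;
            case GuardState.Chase:
                if (!s.SeesPlayer) State = GuardState.Patrol;
                else if (s.DistanceToPlayer <= AttackRange) State = GuardState.Attack;
                break;
            case GuardState.Attack:
                if (!s.SeesPlayer) State = GuardState.Patrol;
                else if (s.DistanceToPlayer > AttackRange) State = GuardState.Chase;
                break;
            case GuardState.Flee:
                // Only reached once health is back above FleeHealth.
                State = GuardState.Patrol;
                break;
        }
        return State;
    }
}
```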
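The behavior tree node types named above (Sequence, Selector, Decorator, Action) can be sketched in a few small classes. This minimal version assumes the usual Success/Failure/Running return convention and re-evaluates the tree from the root on every tick, with no memory of which child was running. All class names, including the example GuardTree, are hypothetical rather than any particular package’s API.

```csharp
using System;

public enum Status { Success, Failure, Running }

public abstract class Node
{
    public abstract Status Tick();
}

// Runs children in order; stops at the first child that does not succeed.
public sealed class Sequence : Node
{
    private readonly Node[] children;
    public Sequence(params Node[] children) => this.children = children;

    public override Status Tick()
    {
        foreach (var child in children)
        {
            Status s = child.Tick();
            if (s != Status.Success) return s; // Failure or Running ends the sequence
        }
        return Status.Success;
    }
}

// Tries children in order; stops at the first child that does not fail.
public sealed class Selector : Node
{
    private readonly Node[] children;
    public Selector(params Node[] children) => this.children = children;

    public override Status Tick()
    {
        foreach (var child in children)
        {
            Status s = child.Tick();
            if (s != Status.Failure) return s; // Success or Running ends the selector
        }
        return Status.Failure;
    }
}

// Decorator: swaps Success and Failure, passes Running through.
public sealed class Inverter : Node
{
    private readonly Node child;
    public Inverter(Node child) => this.child = child;

    public override Status Tick()
    {
        Status s = child.Tick();
        return s == Status.Success ? Status.Failure
             : s == Status.Failure ? Status.Success
             : Status.Running;
    }
}

// Leaf: wraps a delegate that performs work or checks a condition.
public sealed class ActionNode : Node
{
    private readonly Func<Status> action;
    public ActionNode(Func<Status> action) => this.action = action;
    public override Status Tick() => action();
}

// Example tree: attack if the player is visible and in range, chase if only
// visible, otherwise patrol. The delegates are hypothetical hooks into game code.
public static class GuardTree
{
    public static Node Build(Func<bool> seesPlayer, Func<bool> inRange,
                             Func<Status> attack, Func<Status> chase, Func<Status> patrol)
    {
        Node Check(Func<bool> cond) => new ActionNode(() => cond() ? Status.Success : Status.Failure);

        return new Selector(
            new Sequence(Check(seesPlayer),
                new Selector(
                    new Sequence(Check(inRange), new ActionNode(attack)),
                    new ActionNode(chase))),
            new ActionNode(patrol));
    }
}
```

Calling Tick() on the root once per frame (or per AI update) produces attack, chase, or patrol depending on the two conditions. Full behavior tree libraries add features this sketch omits, such as blackboards and nodes that resume a running child.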
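A utility AI scores every candidate action for the current situation and picks the highest. The sketch below shows that core selection loop with hypothetical soldier actions and hand-picked scoring curves; real systems usually combine several normalized considerations per action and may add randomness or hysteresis to avoid rapid switching.

```csharp
using System;
using System.Collections.Generic;

// One candidate action and the function that scores it for a given context.
public sealed class UtilityAction<TContext>
{
    public string Name { get; }
    private readonly Func<TContext, float> scorer;

    public UtilityAction(string name, Func<TContext, float> scorer)
    {
        Name = name;
        this.scorer = scorer;
    }

    public float Score(TContext context) => scorer(context);
}

public static class UtilitySelector
{
    // Scores every action and returns the highest-scoring one (earliest wins ties).
    public static UtilityAction<T> Pick<T>(IReadOnlyList<UtilityAction<T>> actions, T context)
    {
        if (actions == null || actions.Count == 0)
            throw new ArgumentException("At least one action is required.", nameof(actions));

        UtilityAction<T> best = actions[0];
        float bestScore = best.Score(context);
        for (int i = 1; i < actions.Count; i++)
        {
            float score = actions[i].Score(context);
            if (score > bestScore) { best = actions[i]; bestScore = score; }
        }
        return best;
    }
}

// Hypothetical inputs for a soldier NPC, each normalized to 0..1.
public readonly struct SoldierContext
{
    public readonly float Health;   // 1 = full health
    public readonly float Ammo;     // 1 = full magazine
    public readonly float Distance; // 0 = touching the enemy, 1 = at max range

    public SoldierContext(float health, float ammo, float distance)
    {
        Health = health; Ammo = ammo; Distance = distance;
    }
}

public static class SoldierActions
{
    // Illustrative scoring curves; real games tune these heavily.
    public static readonly IReadOnlyList<UtilityAction<SoldierContext>> All =
        new List<UtilityAction<SoldierContext>>
        {
            new UtilityAction<SoldierContext>("Attack", c => c.Ammo * (1f - c.Distance)),
            new UtilityAction<SoldierContext>("Reload", c => 1f - c.Ammo),
            // Squared so taking cover only dominates once health is quite low.
            new UtilityAction<SoldierContext>("TakeCover", c => (1f - c.Health) * (1f - c.Health)),
        };
}
```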

Action: After deciding, the agent performs that action in the game. Examples include:

- Moving to a location.

- Attacking a target.

- Using an ability.

- Interacting with an object.
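Moving and attacking often come down to a NavMeshAgent destination plus a cooldown timer. The following minimal sketch chases a target and attacks once it is in range; the ChaseAndAttack name, the 0.9 stopping factor, and the log-only “attack” are assumptions standing in for game-specific code.

```csharp
using UnityEngine;
using UnityEngine.AI;

// Hypothetical action layer: move toward a target, attack on a cooldown when in range.
[RequireComponent(typeof(NavMeshAgent))]
public class ChaseAndAttack : MonoBehaviour
{
    public Transform target;
    public float attackRange = 2f;
    public float attackCooldown = 1.5f; // seconds between attacks

    NavMeshAgent agent;
    float nextAttackTime;

    void Awake()
    {
        agent = GetComponent<NavMeshAgent>();
        agent.stoppingDistance = attackRange * 0.9f; // stop just inside attack range
    }

    void Update()
    {
        if (target == null) return;

        float distance = Vector3.Distance(transform.position, target.position);
        if (distance > attackRange)
        {
            // Action: move to a location.
            agent.isStopped = false;
            agent.SetDestination(target.position);
        }
        else
        {
            agent.isStopped = true;
            // Action: attack a target (placeholder; apply damage here in a real game).
            if (Time.time >= nextAttackTime)
            {
                nextAttackTime = Time.time + attackCooldown;
                Debug.Log($"{name} attacks {target.name}");
            }
        }
    }
}
```

Calling SetDestination every frame keeps the sketch short but can be wasteful with many agents; re-pathing only when the target has moved noticeably is a common refinement.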

Tools supporting Unity-based AI agent development

Unity offers many built-in tools and features to simplify AI development:

Navigation System (NavMesh): This is one of Unity’s most powerful tools for AI. The NavMesh system lets you “bake” a navigation mesh onto the static surfaces in your scene. A Unity-based AI agent with a NavMeshAgent component can then compute a path across that mesh and steer around obstacles and other agents automatically.

- NavMeshAgent: A component attached to the AI agent so it can move on the NavMesh. You can adjust speed, acceleration, stopping distance, etc.

- NavMeshObstacle: A component for dynamic obstacles, such as moving crates or doors, that lets NavMeshAgents avoid them in real time.
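The NavMeshAgent settings listed above can also be set from code, and a NavMeshObstacle can be added at runtime. In this sketch the NavSetup helper and its values are illustrative (the speed, acceleration, and angular speed shown match the component’s defaults). Enabling carving makes the obstacle cut a hole in the NavMesh so agents plan paths around it, rather than only steering around it locally.

```csharp
using UnityEngine;
using UnityEngine.AI;

// Hypothetical setup helper; values are examples, not recommendations.
public static class NavSetup
{
    public static void ConfigureAgent(NavMeshAgent agent)
    {
        agent.speed = 3.5f;          // maximum movement speed (units/s)
        agent.acceleration = 8f;     // units/s^2
        agent.angularSpeed = 120f;   // degrees/s
        agent.stoppingDistance = 0.5f;
        agent.autoBraking = true;    // slow down when approaching the destination
        agent.obstacleAvoidanceType = ObstacleAvoidanceType.MedQualityObstacleAvoidance;
    }

    // Turns a movable object (e.g. a crate) into an obstacle for agents.
    public static NavMeshObstacle MakeObstacle(GameObject go, Vector3 size)
    {
        var obstacle = go.AddComponent<NavMeshObstacle>();
        obstacle.shape = NavMeshObstacleShape.Box;
        obstacle.size = size;
        obstacle.carving = true;             // cut a hole in the NavMesh...
        obstacle.carveOnlyStationary = true; // ...but only while the object is at rest
        return obstacle;
    }
}
```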

Scripting API (C#): The C# programming language is the heart of Unity, allowing you to customize and build complex AI logic from scratch or extend existing systems.

Unity ML-Agents Toolkit: For those looking to explore machine-learning-based AI, ML-Agents is a powerful open-source framework. It lets you train a Unity-based AI agent through reinforcement learning or imitation learning, producing complex, adaptive behaviors that would be hard to script by hand.
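To give a feel for the ML-Agents workflow, here is a hedged sketch of an agent that learns to reach a randomly placed target, loosely modeled on the toolkit’s introductory examples. It assumes the com.unity.ml-agents package (2.x API), a flat arena about 10 × 10 units centered on the agent’s parent, a Behavior Parameters component set to 6 vector observations and 2 continuous actions, and a Decision Requester component. The class name and reward values are our own choices. Training itself runs on the Python side with the mlagents-learn command.

```csharp
using UnityEngine;
using Unity.MLAgents;
using Unity.MLAgents.Actuators;
using Unity.MLAgents.Sensors;

// Hypothetical "reach the target" agent; positions are local to the arena parent.
public class ReachTargetAgent : Agent
{
    public Transform target;
    public float moveSpeed = 3f;
    const float ArenaHalfSize = 5f;

    public override void OnEpisodeBegin()
    {
        transform.localPosition = new Vector3(0f, 0.5f, 0f);
        target.localPosition = new Vector3(Random.Range(-4f, 4f), 0.5f, Random.Range(-4f, 4f));
    }

    public override void CollectObservations(VectorSensor sensor)
    {
        sensor.AddObservation(transform.localPosition); // 3 values
        sensor.AddObservation(target.localPosition);    // 3 values
    }

    public override void OnActionReceived(ActionBuffers actions)
    {
        float x = actions.ContinuousActions[0];
        float z = actions.ContinuousActions[1];
        transform.localPosition += new Vector3(x, 0f, z) * moveSpeed * Time.deltaTime;

        AddReward(-0.001f); // small per-step penalty encourages reaching the target quickly

        if (Vector3.Distance(transform.localPosition, target.localPosition) < 1f)
        {
            SetReward(1f);
            EndEpisode();
        }
        else if (Mathf.Abs(transform.localPosition.x) > ArenaHalfSize ||
                 Mathf.Abs(transform.localPosition.z) > ArenaHalfSize)
        {
            SetReward(-1f); // left the arena
            EndEpisode();
        }
    }

    // Manual control for testing (assumes the legacy Input Manager axes).
    public override void Heuristic(in ActionBuffers actionsOut)
    {
        var continuous = actionsOut.ContinuousActions;
        continuous[0] = Input.GetAxis("Horizontal");
        continuous[1] = Input.GetAxis("Vertical");
    }
}
```

Before training, setting Behavior Type to Heuristic Only lets you drive the agent with the arrow keys to check that observations, actions, and rewards behave as expected.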

Asset Store: The Unity Asset Store contains countless paid and free AI assets, from complete Behavior Tree systems to advanced pathfinding solutions, significantly speeding up the development process.

Basic process to create a simple Unity-based AI agent

Let’s consider a simple example of creating an NPC capable of patrolling between points:

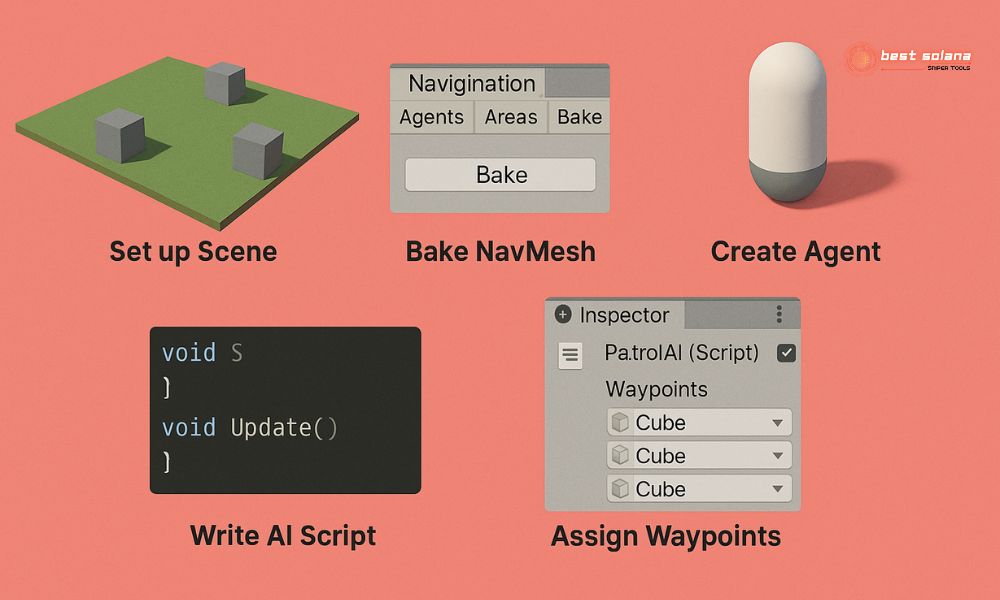

- Set up Scene: Create a Plane for the ground and a few Cube objects as patrol waypoints.

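- Bake NavMesh: In the Navigation window (Window > AI > Navigation), select the Bake tab and click Bake to create the NavMesh for the ground. In Unity 2022.2 and later, the recommended workflow is instead to add a NavMeshSurface component (from the AI Navigation package) to the ground and click Bake in its Inspector.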

- Create Agent: Create a GameObject (e.g., a Capsule) to represent the AI agent. Add a NavMeshAgent component to it.

- Write AI Script: Create a new C# script (e.g., PatrolAI) and attach it to the agent.

- Assign Waypoints: In the agent’s Inspector, drag the Cube objects (waypoints) into the Waypoints array of the PatrolAI script.
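A minimal PatrolAI script for these steps might look like the following. Only the script name and the Waypoints array come from the steps; the arriveThreshold field and the looping behavior are assumptions. Because the public field is named waypoints, the Inspector displays it as Waypoints.

```csharp
using UnityEngine;
using UnityEngine.AI;

// Sends the NavMeshAgent to each waypoint in order, looping back to the first.
[RequireComponent(typeof(NavMeshAgent))]
public class PatrolAI : MonoBehaviour
{
    public Transform[] waypoints;         // assign the Cube objects in the Inspector
    public float arriveThreshold = 0.5f;  // hypothetical slack on top of stoppingDistance

    NavMeshAgent agent;
    int current;

    void Start()
    {
        agent = GetComponent<NavMeshAgent>();
        if (waypoints != null && waypoints.Length > 0)
            agent.SetDestination(waypoints[current].position);
    }

    void Update()
    {
        if (waypoints == null || waypoints.Length == 0) return;

        // remainingDistance is only meaningful once the path has been computed.
        if (!agent.pathPending &&
            agent.remainingDistance <= agent.stoppingDistance + arriveThreshold)
        {
            current = (current + 1) % waypoints.Length; // wrap to the first waypoint
            agent.SetDestination(waypoints[current].position);
        }
    }
}
```

The modulo makes the route loop; stopping at the last waypoint instead would give a one-way path.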

When you enter Play mode, you’ll see the agent move through the defined points in sequence. This is a very basic example, but it illustrates how a Unity-based AI agent uses the NavMesh for movement.

Challenges and future directions

Developing a Unity-based AI agent is not always straightforward. Some challenges include:

Performance: Complex AI can consume significant CPU time, especially when many agents are active at once. Optimization is crucial, for example running expensive perception and decision logic less often than every frame.
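One common optimization is time-slicing: instead of every agent running its expensive decision logic every frame, a manager runs only a fixed number of agents per frame in round-robin order. The sketch below is plain C# so it can be tested outside Unity; ThinkScheduler and its budget parameter are hypothetical names, and a real manager would call Tick() from a single MonoBehaviour’s Update and support removing agents.

```csharp
using System;
using System.Collections.Generic;

// Round-robin time-slicing: runs at most BudgetPerFrame "think" callbacks per Tick,
// continuing where the previous Tick stopped.
public sealed class ThinkScheduler
{
    private readonly List<Action> thinkers = new List<Action>();
    private int next; // index of the next agent to run

    public int BudgetPerFrame { get; }

    public ThinkScheduler(int budgetPerFrame)
    {
        if (budgetPerFrame <= 0)
            throw new ArgumentOutOfRangeException(nameof(budgetPerFrame));
        BudgetPerFrame = budgetPerFrame;
    }

    public void Register(Action think) => thinkers.Add(think);

    // Call once per frame, e.g. from one manager MonoBehaviour's Update().
    public void Tick()
    {
        int runs = Math.Min(BudgetPerFrame, thinkers.Count);
        for (int i = 0; i < runs; i++)
        {
            if (next >= thinkers.Count) next = 0; // wrap around
            thinkers[next++]();
        }
    }
}
```

With 10 registered agents and a budget of 3, each agent thinks once every 3 to 4 frames, while the per-frame AI cost stays capped.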

Believability: Making AI behave naturally and credibly, rather than robotically, requires finesse in design.

Debugging: Finding errors in complex AI logic can be time-consuming. Unity offers aids such as Gizmos and Debug.DrawRay for visualizing what an agent senses and where it is heading, but designing AI modularly and testably is also very helpful.
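Gizmos are a cheap way to see what an agent is doing. This sketch (the AgentDebugGizmos class and its viewDistance field are hypothetical) draws a wire sphere for a sensing range and, in Play mode, the corners of the agent’s current NavMesh path whenever the object is selected in the Scene view.

```csharp
using UnityEngine;
using UnityEngine.AI;

// Scene-view visualization of an agent's sensing range and current path.
[RequireComponent(typeof(NavMeshAgent))]
public class AgentDebugGizmos : MonoBehaviour
{
    public float viewDistance = 15f; // set this to match your sensor's range

    void OnDrawGizmosSelected()
    {
        Gizmos.color = Color.yellow;
        Gizmos.DrawWireSphere(transform.position, viewDistance);

        // The agent only has a path while the game is running.
        if (!Application.isPlaying) return;

        var agent = GetComponent<NavMeshAgent>();
        if (agent == null || !agent.hasPath) return;

        Gizmos.color = Color.cyan;
        Vector3[] corners = agent.path.corners;
        for (int i = 1; i < corners.Length; i++)
            Gizmos.DrawLine(corners[i - 1], corners[i]);
    }
}
```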

In the future, we can expect even more capable AI tooling in Unity, especially as machine learning and tools like ML-Agents advance, paving the way for increasingly intelligent and adaptive Unity-based AI agents.

Developing a Unity-based AI agent is an exciting journey that opens up countless creative possibilities for game developers. Unity provides a solid foundation and powerful tools for realizing even the most complex AI ideas.